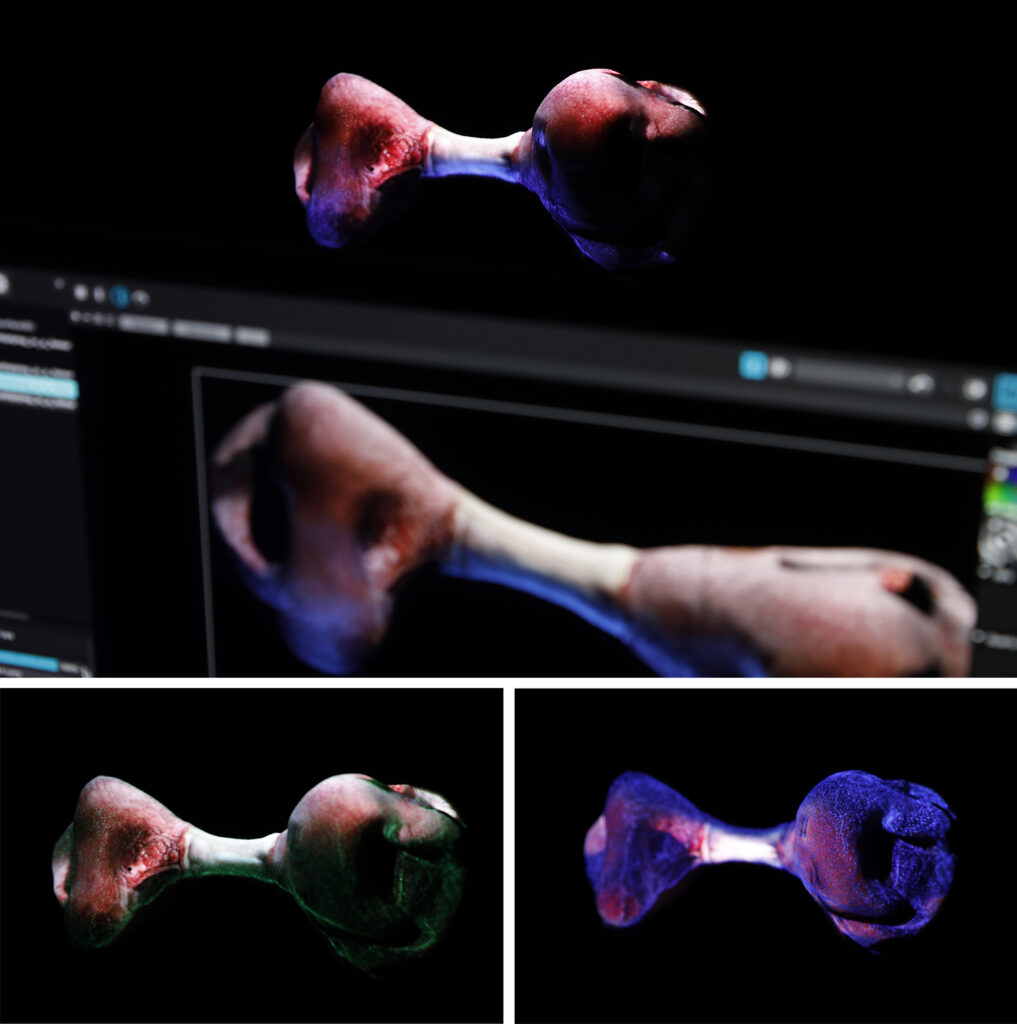

-Title: AI Onco-Sculptures

-Medium: Augmented AI-generated color 3D powder print

-Size: 7″ W x 6″ H x 6″ D

-Year of completion: 2024 - In Process

“AI Onco-Sculptures” is an experiment in AI-generated art on the theme of cancer, specifically bone cancer.

In 2020, I was diagnosed with pleomorphic bone sarcoma in my left femur and underwent an extensive diagnostic process, followed by chemotherapy and radiotherapy. By the end of 2020, I was cancer-free, but my left hip, femur, and knee had been replaced with a bone endoprosthesis.

My personal experience with bone cancer informs this project on many levels, from the data used to generate the initial 3D models to the further manipulation and 3D fabrication of these models.

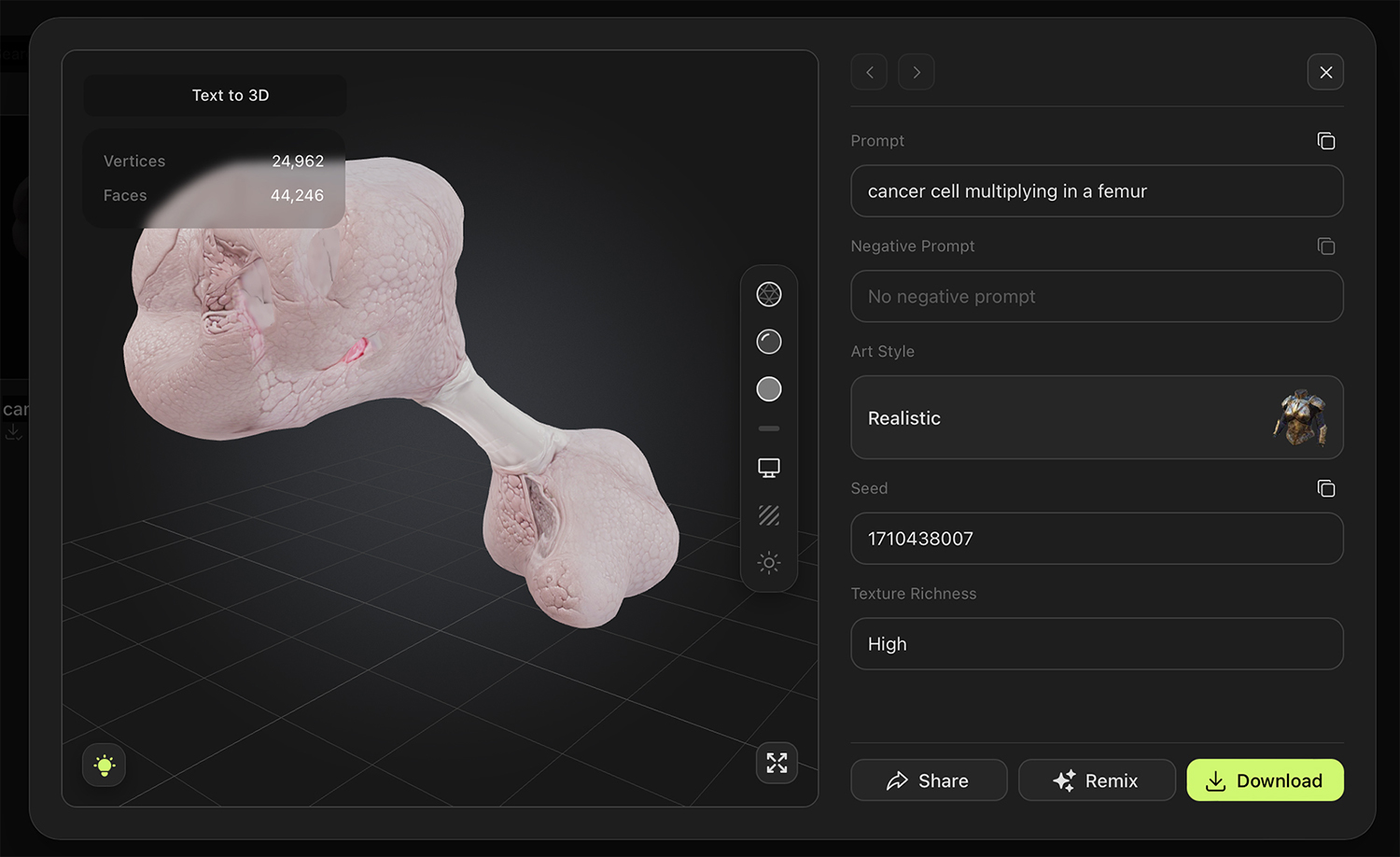

I am currently exploring two routes of AI generative experimentation: text prompt to 3D object, and AI-generated image to 3D object.

Once a 3D model is selected, I use an n-polygon and subdivision surface modeling program to enhance some features of the model, combine it with other models, and prepare them for optimal printing.

Once the models are 3D printed, I project moving images onto them using 3D video mapping techniques. Additionally, I sometimes light the cavities in the models using controllable NeoPixels embedded in them. The projected images and lighting patterns respond to the passive and active interaction of visitors through capacitive touch sensors installed on the surface of the object.

The material I am experimenting with is VisiJet PXL combined with a 4-channel CMYK full-color binder (powder printing), and I print on a ProJet 660 powder printer.

First step: Generation of a 3D model using a text prompt (“cancer cell multiplying in a femur”). Several iteration cycles and selection processes were conducted before reaching this model.

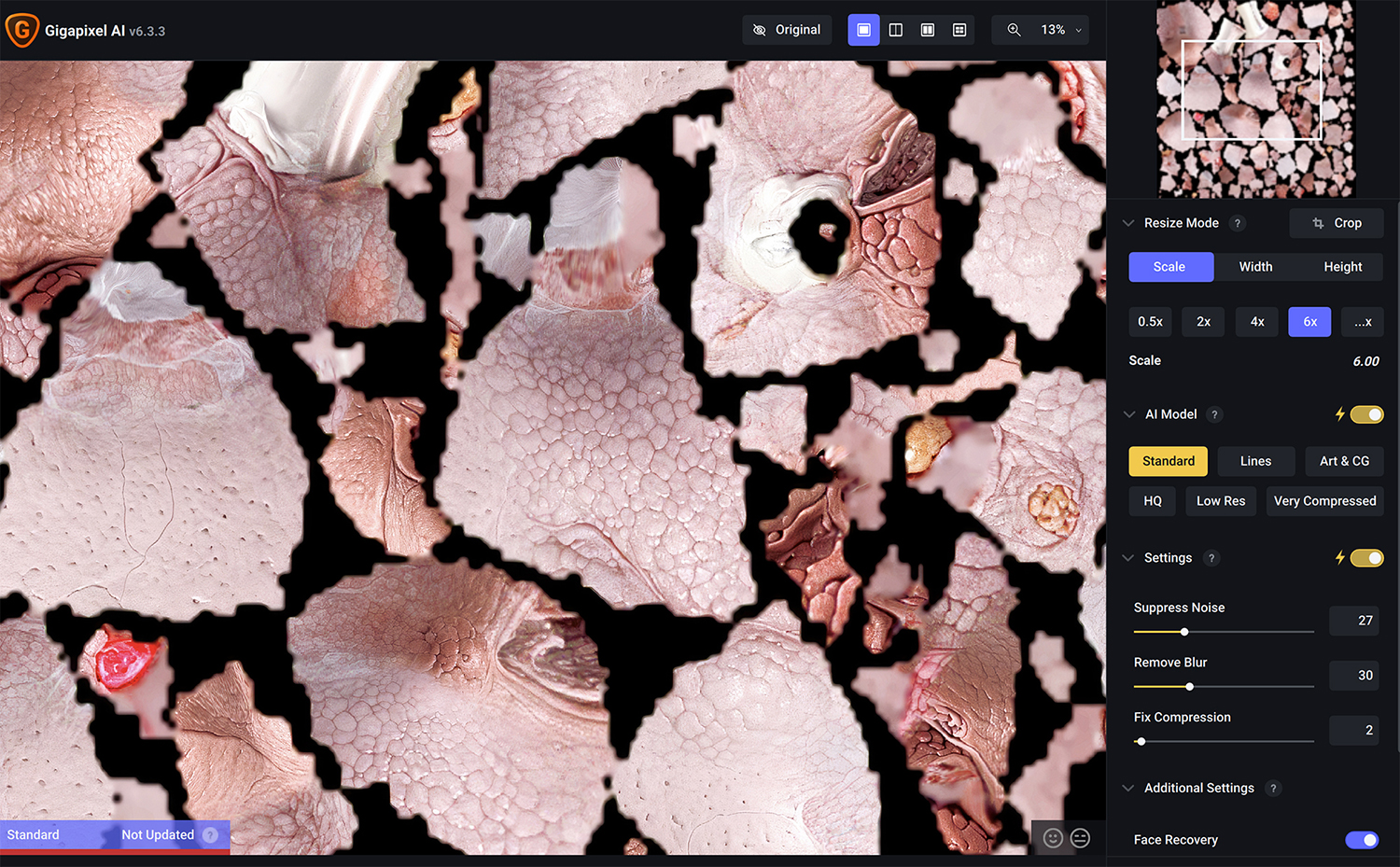

Second step: Enhancement and optimization of the UV map texture for 3D printing using Gigapixel AI and other image editing software.

Fifth step: Adding projected media and interactivity.

To create the augmented 3D printed sculptures, I’m installing capacitive touch sensors in the sculptures. When a visitor touches a sculpture, a series of moving patterns is projected onto it. I’m using MadMapper to generate the video mapping content and an Optoma ML1050ST short-throw projector.
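The touch-triggered behavior described above can be sketched as follows. This is a minimal illustration, not the code used in the installation. The `TouchTrigger` class, the pattern names, the threshold values, and the simulated readings are all hypothetical. Readings are assumed to be normalized to the 0 to 1 range, and how a triggered pattern reaches the projection software or the lights is left out.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TouchTrigger:
    """Turns raw capacitance readings into 'start the next pattern' events.

    All names and values here are hypothetical placeholders.
    """
    patterns: List[str]
    touch_threshold: float = 0.6    # assumed level that counts as a touch
    release_threshold: float = 0.4  # assumed lower level that counts as a release
    touched: bool = False
    next_index: int = 0

    def update(self, reading: float) -> Optional[str]:
        """Return the pattern to start on a new touch, otherwise None."""
        if not self.touched and reading >= self.touch_threshold:
            # Rising edge: a new touch starts the next pattern in the series.
            self.touched = True
            pattern = self.patterns[self.next_index]
            self.next_index = (self.next_index + 1) % len(self.patterns)
            return pattern
        if self.touched and reading <= self.release_threshold:
            # Falling edge: the sensor is released and can fire again.
            self.touched = False
        return None


if __name__ == "__main__":
    trigger = TouchTrigger(patterns=["ripple", "pulse", "bloom"])
    simulated_readings = [0.1, 0.7, 0.8, 0.5, 0.3, 0.9, 0.2]
    for reading in simulated_readings:
        pattern = trigger.update(reading)
        if pattern is not None:
            print(f"touch detected, start pattern: {pattern}")
```

The two thresholds form a simple hysteresis band, so a noisy reading that hovers near the touch level does not retrigger the projection many times during a single touch.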